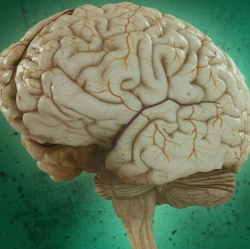

Harvard University has been awarded $28 million (£19m) to investigate why brains are so much better at learning and retaining information than artificial intelligence. The award, from the Intelligence Advanced Research Projects Activity (IARPA), could help make AI systems faster, smarter and more like human brains.

Although some computers have a storage capacity comparable to the brain's, their ability to recognise patterns and learn information does not match it. A better understanding of how neurons are connected could help researchers develop more sophisticated artificial intelligence.

Most neuroscientists estimate that the ‘storage capacity’ of the human brain ranges between 10 and 100 terabytes, with some evaluations putting the figure closer to 2.5 petabytes. In terms of functionality, the human brain has a huge range: data analysis, pattern recognition and the ability to learn and retain information are just a few of the processes it carries out every day.

A human being only needs to look at a car a few times to recognise one, but an AI system may need to process hundreds or even thousands of samples before it can retain the information. Conversely, although its functions are more complex and varied, the human brain cannot process the same volume of data as a computer.

Harvard’s John A. Paulson School of Engineering and Applied Sciences (SEAS), Center for Brain Science (CBS) and Department of Molecular and Cellular Biology will work together to record activity inside the visual cortex.

The teams hope this will help them understand how neurons are connected to one another, with the end goal of creating a more accurate and complex artificial intelligence system. These insights could lead to the first computer systems that can interpret, analyse and learn information as quickly and successfully as human beings.

Such systems could be used to detect network intrusions, read MRI scans, drive cars, or complete just about any task normally reserved for human brains. The project will generate over a petabyte of data, which will be analysed by a series of complex algorithms to create a detailed 3D neural map.

"The pattern-recognition and learning abilities of machines still pale in comparison to even the simplest mammalian brains," said Hanspeter Pfister, professor of computer science at Harvard.

"The project is not only pushing the boundaries of brain science, it is also pushing the boundaries of what is possible in computer science. We will reconstruct neural circuits at an unprecedented level from petabytes of structural and functional data. It requires us to make new advances in data management, high-performance computing, computer vision and network analysis."

Once the detailed neural map has been compiled, the teams will try to understand exactly how the connections between neurons allow the brain to process information. The aim is to turn these findings into ‘biologically inspired’ algorithms that could far outperform current AI capabilities, bringing major improvements in computer vision, navigation and recognition.

"This is a moonshot challenge," said project leader David Cox, assistant professor of molecular and cellular biology and computer science. "The scientific value of recording the activity of so many neurons and mapping their connections alone is enormous, but that is only the first half of the project.

"As we figure out the fundamental principles governing how the brain learns, it’s not hard to imagine that we’ll eventually be able to design computer systems that can match, or even outperform, humans."

"We have a huge task ahead of us in this project, but at the end of the day, this research will help us understand what is special about our brains," he added. "One of the most exciting things about this project is that we are working on one of the great remaining achievements for human knowledge: understanding how the brain works at a fundamental level."