“Bias” in AI is often treated as a dirty word. But to Dr. Andreas Tolias at Baylor College of Medicine in Houston, Texas, bias may also be the key to smarter, more human-like AI.

I’m not talking about societal biases (racial or gender, for example) that are passed on to our machine creations. Rather, this is a type of “beneficial” bias built into the structure of a neural network and how it learns. Similar to the genetic rules that help initialize our brains well before birth, “inductive bias” may help narrow down the infinite ways artificial minds can develop, for example by guiding them down a “developmental” path that eventually makes them more flexible.

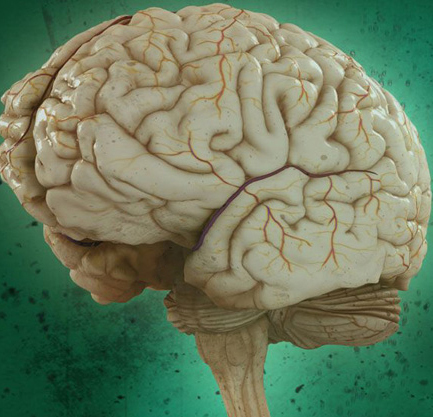

It’s not an intuitive idea. Unconstrained by evolution, AI has the potential to churn through vast amounts of data to surpass our puny, fatty central processors. Yet even as individual algorithms beat humans at specific problems (chess, Go, Dota, breast cancer diagnosis), humans kick their butts every time when it comes to a brand-new task.

Somehow, the innate structure of our brains, when combined with a little worldly experience, lets us easily generalize from one solution to the next. State-of-the-art deep learning networks can’t.

In a new paper published in Neuron, Tolias and colleagues in Germany argue that more data or more layers in artificial neural networks isn’t the answer. Rather, the key is to introduce inductive biases—somewhat analogous to an evolutionary drive—that nudge algorithms towards the ability to generalize across drastic changes.

“The next generation of intelligent algorithms will not be achieved by following the current strategy of making networks larger or deeper. Perhaps counter-intuitively, it might be the exact opposite…we need to add more bias to the class of [AI] models,” the authors said.

What better source of inspiration than our own brains?

Moravec’s Paradox

The problem of AI being a one-trick pony dates back decades, long before deep learning took the world by storm. Even as Deep Blue trounced Kasparov in their legendary man-versus-machine chess match, AI researchers knew they were in trouble. “It’s comparatively easy to make computers exhibit adult-level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility,” said computer scientist Hans Moravec, who, along with Marvin Minsky and others, famously articulated the idea that bears his name.

Simply put: AIs can’t easily translate their learning from one situation to another, even when they’ve mastered a single task. The paradox held true for hand-coded algorithms, and it remains an untamed beast even with the meteoric rise of machine learning. Splattering an image with noise, for example, easily trips up a well-trained recognition algorithm. Humans, in contrast, can subconsciously recognize a partially occluded face at a different angle and under different lighting, or in snow and rain.
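
To make “splattering an image with noise” concrete, here is a minimal sketch of that kind of perturbation. It is an illustration only, not code from the paper: the function name `add_gaussian_noise`, the tiny 3x3 “image,” and the noise level are all assumptions chosen for readability.

```python
import random


def add_gaussian_noise(image, sigma, seed=0):
    """Return a copy of `image` with zero-mean Gaussian noise on every pixel.

    `image` is a list of rows of grayscale intensities in [0.0, 1.0].
    `sigma` is the noise standard deviation (hypothetical parameter name).
    """
    rng = random.Random(seed)  # fixed seed so the perturbation is repeatable
    noisy = []
    for row in image:
        noisy_row = []
        for pixel in row:
            value = pixel + rng.gauss(0.0, sigma)
            # Clamp so the result is still a valid intensity.
            noisy_row.append(min(1.0, max(0.0, value)))
        noisy.append(noisy_row)
    return noisy


# A toy 3x3 "image": a bright diagonal on a dark background.
image = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

noisy_image = add_gaussian_noise(image, sigma=0.2)
```

A person would still read the noisy version as a diagonal line. The point in the text is that a trained recognition network, fed an image perturbed in this way, can change its answer.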

The core problem, the authors explain, is that whatever features the deep network is extracting don’t capture the whole scene or context. Without a robust “understanding” of what it’s looking at, an AI falters at even the slightest perturbation. Deep networks just don’t seem to be able to integrate pixels across long ranges to distill relationships between them, such as piecing together different parts of the same object. Our brains process a human face as a whole face, rather than as eyes, nose, and other individual components; AI “sees” a face through statistical correlations between pixels.

That’s not to say that algorithms don’t show any sort of transfer in their learned skills. Pre-training deep networks to recognize objects on ImageNet, a giant repository of images of our natural world, can be “surprisingly” beneficial for all kinds of other tasks.

Yet this flexibility alone isn’t a silver bullet, the authors argue. Without a slew of correct assumptions to guide the deep neural nets in their learning, “generalization is impossible.”
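
A toy example may help show why assumptions matter. The sketch below is not from the paper. It compares a learner with no built-in assumptions (a lookup table that only memorizes) against one that assumes the data follow a straight line. The function names and the data are hypothetical, picked so the arithmetic is easy to check.

```python
def fit_lookup_table(xs, ys):
    """A learner with no inductive bias: it memorizes the training pairs."""
    table = dict(zip(xs, ys))
    return lambda x: table.get(x)  # returns None for any input it never saw


def fit_line(xs, ys):
    """A learner biased toward straight lines (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


# Four training points generated by y = 2x + 1.
train_x = [0, 1, 2, 3]
train_y = [1, 3, 5, 7]

memorizer = fit_lookup_table(train_x, train_y)
line = fit_line(train_x, train_y)

print(memorizer(2), line(2))    # both reproduce a training point
print(memorizer(10), line(10))  # only the biased learner answers for x = 10
```

The memorizer is perfect on what it has seen and has nothing to say about anything else. The line-fitter extrapolates, but only because its assumption happens to match how the data were generated. Had the data followed a curve, the same bias would have misled it, which is why the authors stress that the assumptions need to be correct.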