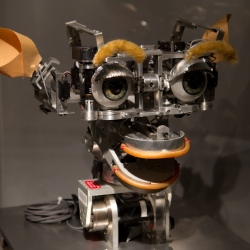

With their fancy 3D cameras, robots can see as well as (and sometimes better than) humans can, but that doesn’t necessarily mean that they understand what they’re seeing. Without good object recognition software, that robot butler you’ve been dreaming about won’t be able to distinguish between a bottle of Drano and a pitcher of orange juice. That just won’t do for a thirsty human, so the folks at MIT are working on improving the way robots see.

Led by grad student Jared Glover, a group at MIT has refined existing algorithms to give robots an even better chance of not accidentally poisoning their human masters. Based on a statistical construct known as the Bingham distribution, Glover’s new algorithm allows robots not only to see objects, but to identify their orientation as well.

It’s all very mathematically complex, but Glover’s algorithm reportedly identifies objects about 15 percent better than anything else out there. Robots using Glover’s system correctly identified a full 84 percent of the objects they saw. Objects piled up on top of one another presented a slightly more difficult problem for the software, but it still identified the jumbled-up objects 73 percent of the time.