Whenever we smile or frown, or express any number of emotions with our faces, we move a large number of muscles in a complex way. Although we're not conscious of it, when we look at a facial expression, a whole region of our brain is devoted to decoding the information those muscle movements convey.

Now, researchers at the Ohio State University have worked to pinpoint exactly where in the brain that processing occurs. To do so, they turned to functional magnetic resonance imaging (fMRI), a technique that detects increased blood flow in the brain, indicating which regions are active.

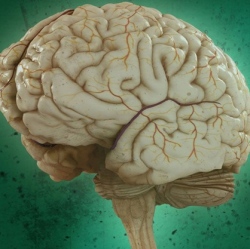

Ten students were placed in an fMRI machine and shown more than 1,000 photographs of people making facial expressions, each of which corresponded to one of seven emotional categories. Throughout the test, all participants showed increased activity in the same region of the brain, known as the posterior superior temporal sulcus (pSTS). This confirms that the region, located behind the ear on the right side of the brain, is responsible for recognizing facial expressions, but the data allowed the team to dig a little deeper.

By cross-referencing the fMRI images with the muscle movements involved in each expression, the team was able to map smaller regions within the pSTS that are activated by the movement of particular muscles. These maps were then used to build a machine learning algorithm that can identify which facial expression an individual is looking at based purely on their fMRI data.

They tested the system extensively. The team built the maps from the data of nine participants, then fed the fMRI images from the tenth student to the algorithm, which correctly identified the expression around 60 percent of the time. To confirm the results, the experiment was repeated, rebuilding the maps from scratch and holding out a different student as the one whose scans were decoded.
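Training on nine participants and testing on the tenth is a form of leave-one-subject-out cross-validation. The minimal sketch below illustrates that procedure under loud assumptions: the data is synthetic, each trial is reduced to a hypothetical vector of voxel activations, and a simple nearest-centroid classifier stands in for whatever algorithm the Ohio State team actually used. The names `make_synthetic_data`, `fit_centroids` and `leave_one_subject_out` are illustrative only.

```python
import numpy as np

# Hypothetical setup (not from the study): 10 subjects, 7 expression
# categories, each trial a vector of N_VOXELS synthetic activations.
N_SUBJECTS = 10
N_CLASSES = 7
N_VOXELS = 50
TRIALS_PER_CLASS = 20


def make_synthetic_data(seed=0):
    """Return a list of (X, y) pairs, one per simulated subject."""
    rng = np.random.default_rng(seed)
    # Assumption being illustrated: each category evokes a similar
    # activation pattern ("map") in every subject, plus individual noise.
    class_maps = rng.normal(size=(N_CLASSES, N_VOXELS))
    data = []
    for _ in range(N_SUBJECTS):
        labels = np.repeat(np.arange(N_CLASSES), TRIALS_PER_CLASS)
        noise = rng.normal(scale=2.0, size=(labels.size, N_VOXELS))
        data.append((class_maps[labels] + noise, labels))
    return data


def fit_centroids(X, y):
    """Average pattern per category: a stand-in for the learned maps."""
    return np.stack([X[y == c].mean(axis=0) for c in range(N_CLASSES)])


def predict(centroids, X):
    """Assign each trial to the category whose centroid is closest."""
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)


def leave_one_subject_out(data):
    """Train on all subjects but one, test on the held-out subject, repeat."""
    accuracies = []
    for held_out in range(len(data)):
        train = [d for i, d in enumerate(data) if i != held_out]
        X_train = np.concatenate([X for X, _ in train])
        y_train = np.concatenate([y for _, y in train])
        centroids = fit_centroids(X_train, y_train)
        X_test, y_test = data[held_out]
        accuracies.append(float((predict(centroids, X_test) == y_test).mean()))
    return accuracies


if __name__ == "__main__":
    accs = leave_one_subject_out(make_synthetic_data())
    print(f"mean accuracy: {np.mean(accs):.2f} (chance is about {1 / N_CLASSES:.2f})")
```

Because the classifier never sees the held-out subject's scans during training, any accuracy above chance suggests the activation patterns generalize across people. Assuming the seven categories were shown equally often, chance would be about 1 in 7 (roughly 14 percent), which puts the reported 60 percent well above guessing.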

Because maps built from nine participants could decode the expressions seen by a tenth, the study essentially confirms that the mechanisms we use to identify facial expressions are very similar from one person to the next.

"This work could have a variety of applications, helping us not only understand how the brain processes facial expressions, but ultimately how this process may differ in people with autism for example," said study co-author Julie Golomb.

This new work isn't the only recent breakthrough related to how we view faces. Earlier this month, University of Montreal researchers announced the results of a study confirming that Alzheimer's patients actually lose the ability to perceive faces holistically, which is thought to be how we recognize loved ones.